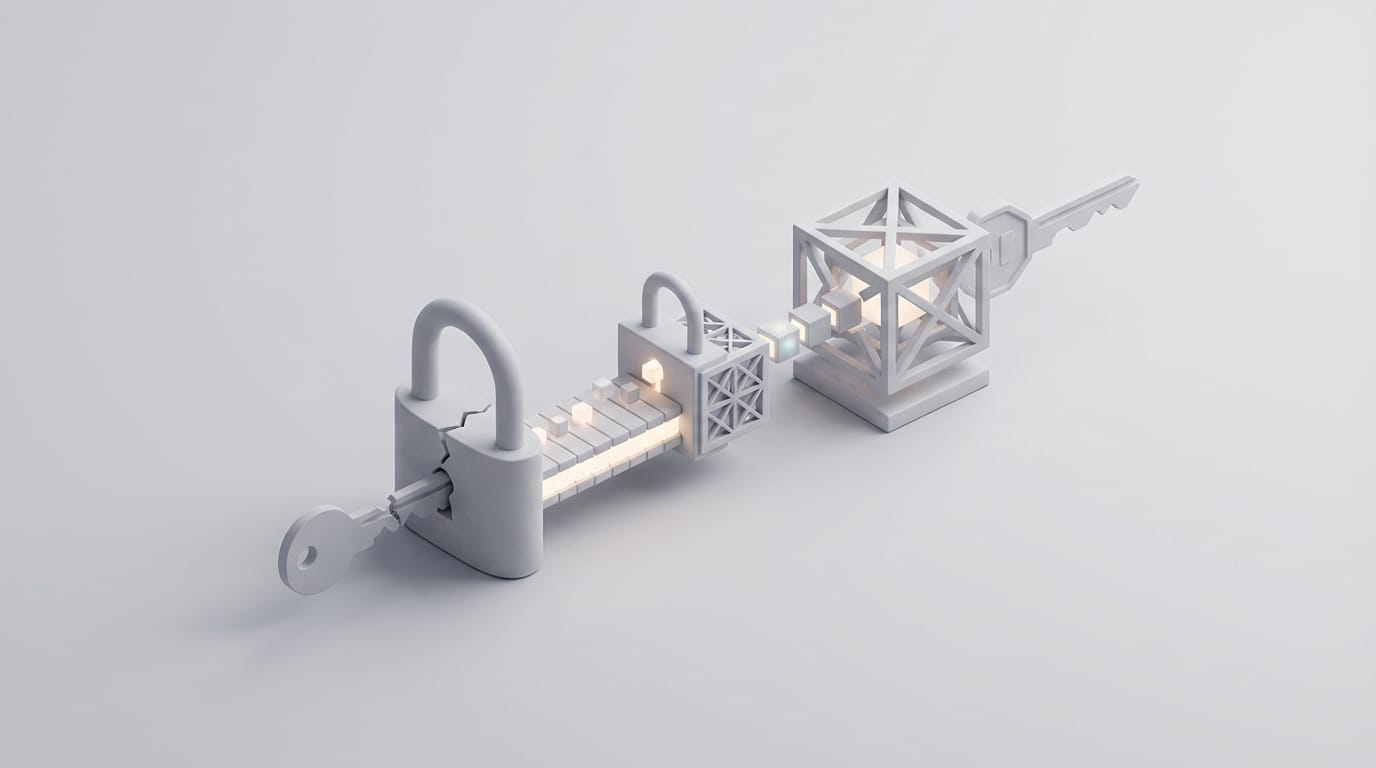

The transition to post-quantum cryptography (PQC) is not a simple software patch but a fundamental architectural shift, one that requires proactive planning to restructure how digital systems establish trust. To protect long-lived data, organizations must adopt the new post-quantum cryptography standards before quantum computers become capable of breaking the public-key algorithms in use today.

The Quantum Threat to Public Key Infrastructure

Current public key infrastructure (PKI) relies on the computational difficulty of two mathematical problems: integer factorization and discrete logarithms. Algorithms like RSA and Elliptic Curve Cryptography (ECC) have served as the bedrock of digital trust for decades, securing everything from global banking to private messaging. However, these algorithms share a structural vulnerability: Shor’s algorithm, executed on a sufficiently powerful quantum computer, solves both problems in polynomial time.

A Cryptographically Relevant Quantum Computer (CRQC) does not merely perform calculations faster than a classical supercomputer; it approaches these problems through a different computational paradigm. The best known classical method, the general number field sieve, might take billions of years to factor a 2048-bit RSA modulus. A CRQC running Shor’s algorithm instead uses quantum superposition and interference to find the period of the function f(x) = a^x mod N, where N is the RSA modulus; ordinary arithmetic then turns that period into the factors of N. Theoretically, a CRQC could accomplish this in hours. This reality necessitates a clear distinction between quantum-resistant systems, built on mathematical problems that quantum computers are not known to solve efficiently, and today’s quantum-vulnerable systems.

The immediate risk is often termed “Store Now, Decrypt Later” (SNDL), also known as “Harvest Now, Decrypt Later.” State actors and sophisticated adversaries are currently harvesting encrypted traffic with the intention of decrypting it once quantum hardware matures. For data with a long “shelf life,” such as national security secrets, long-term medical records, or intellectual property, the threat is therefore not a future problem but a present-day exposure. If the data must remain confidential for ten years or more, it may already be at risk.
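One common way to reason about this exposure is Mosca’s inequality: if x (years the data must stay confidential) plus y (years needed to migrate the system) exceeds z (years until a CRQC exists), the data is already at risk. The sketch below encodes that check; the asset names and the value z = 10 are illustrative assumptions, not forecasts.

```python
from dataclasses import dataclass


@dataclass
class DataAsset:
    name: str
    shelf_life_years: float  # x: how long the data must remain confidential
    migration_years: float   # y: how long until this system runs PQC


def at_risk(asset: DataAsset, years_to_crqc: float) -> bool:
    """Mosca's inequality: the asset is exposed if x + y > z."""
    return asset.shelf_life_years + asset.migration_years > years_to_crqc


# Hypothetical assets; z = 10 years is an assumption for illustration only.
assets = [
    DataAsset("session cookies", shelf_life_years=0.1, migration_years=2),
    DataAsset("medical records", shelf_life_years=25, migration_years=3),
]
for asset in assets:
    print(asset.name, at_risk(asset, years_to_crqc=10))
# session cookies False
# medical records True
```

Because z is uncertain, it is usually worth rerunning the check with several values of z rather than trusting a single estimate.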

Analysis of Post-Quantum Cryptography Standards

In response to this shift, the National Institute of Standards and Technology (NIST) published the first set of post-quantum cryptography standards in August 2024. These standards define the mathematical frameworks that will replace RSA and ECC across the global digital economy. The primary focus is lattice-based cryptography, whose security rests on problems such as finding short vectors in a high-dimensional grid of points (a lattice); no known classical or quantum algorithm solves these problems efficiently at the sizes used. Lattice-based schemes offer a strong balance of security, efficiency, and relatively manageable key sizes.
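To give a feel for how lattice-based encryption works, here is a toy version of Regev’s Learning With Errors (LWE) scheme, the problem family that ML-KEM’s Module-LWE builds on. It is not ML-KEM: the dimensions are far too small to be secure, and only the modulus Q = 3329 is borrowed from ML-KEM. The public key hides the secret behind small random errors; decryption works because those errors stay well below Q/4.

```python
import random

Q = 3329  # modulus (the value ML-KEM also uses)
N = 16    # secret dimension: deliberately tiny, NOT secure
M = 32    # number of LWE samples in the public key


def keygen(rng: random.Random):
    """Secret s; public key (A, b = A·s + e mod Q) with errors e in {-1, 0, 1}."""
    s = [rng.randrange(Q) for _ in range(N)]
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    e = [rng.choice((-1, 0, 1)) for _ in range(M)]
    b = [(sum(a_ij * s_j for a_ij, s_j in zip(row, s)) + e_i) % Q
         for row, e_i in zip(A, e)]
    return (A, b), s


def encrypt(pk, bit: int, rng: random.Random):
    """Sum a random subset of samples and hide the bit at Q/2."""
    A, b = pk
    subset = [i for i in range(M) if rng.random() < 0.5]
    u = [sum(A[i][j] for i in subset) % Q for j in range(N)]
    v = (sum(b[i] for i in subset) + bit * (Q // 2)) % Q
    return u, v


def decrypt(s, ct) -> int:
    """v - <u, s> equals bit*(Q//2) plus the summed errors (at most M in size)."""
    u, v = ct
    d = (v - sum(u_j * s_j for u_j, s_j in zip(u, s))) % Q
    # Values near Q/2 decode to 1; values near 0 (mod Q) decode to 0.
    return 1 if Q // 4 < d < 3 * Q // 4 else 0


rng = random.Random(2024)
pk, sk = keygen(rng)
bits = [1, 0, 1, 1, 0]
recovered = [decrypt(sk, encrypt(pk, bit, rng)) for bit in bits]
print(recovered == bits)  # True
```

Recovering s from (A, b) without knowing e is the hard lattice problem; with real parameters the errors make Gaussian elimination useless.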

Key-Encapsulation and Digital Signatures

The three core standards are FIPS 203, FIPS 204, and FIPS 205. FIPS 203 specifies ML-KEM (Module-Lattice-Based Key-Encapsulation Mechanism), derived from the CRYSTALS-Kyber algorithm. This is the primary tool for secure key establishment: it allows two parties to agree on a shared secret over an insecure channel without an eavesdropper, who sees every message exchanged, being able to derive it. Because ML-KEM is efficient, it is the designated replacement for Diffie-Hellman and Elliptic Curve Diffie-Hellman (ECDH) in protocols like TLS.
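A KEM exposes three operations, which FIPS 203 calls ML-KEM.KeyGen, ML-KEM.Encaps, and ML-KEM.Decaps. The sketch below shows that shape using the classical construction ML-KEM replaces: Diffie-Hellman recast as a KEM (hashed ElGamal). The group (integers modulo the Mersenne prime 2^127 − 1 with base 3) is a toy choice assumed for brevity and is not secure; the point is the interface, not the math.

```python
import hashlib
import secrets

# Toy group: NOT a secure choice, used only to show the KEM interface.
P = 2**127 - 1
G = 3


def keygen() -> tuple[int, int]:
    """Receiver: generate (public key, secret key)."""
    sk = secrets.randbelow(P - 2) + 1
    return pow(G, sk, P), sk


def encaps(pk: int) -> tuple[int, bytes]:
    """Sender: derive a fresh shared secret and the ciphertext that carries it."""
    r = secrets.randbelow(P - 2) + 1
    ciphertext = pow(G, r, P)
    shared = hashlib.sha256(pow(pk, r, P).to_bytes(16, "big")).digest()
    return ciphertext, shared


def decaps(sk: int, ciphertext: int) -> bytes:
    """Receiver: recover the same shared secret from the ciphertext."""
    return hashlib.sha256(pow(ciphertext, sk, P).to_bytes(16, "big")).digest()


pk, sk = keygen()
ct, ss_sender = encaps(pk)
print(decaps(sk, ct) == ss_sender)  # True
```

Swapping this classical KEM for ML-KEM keeps the calling code identical; only the key, ciphertext, and secret sizes change.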

FIPS 204 and FIPS 205 address digital signatures, which verify the identity of a sender and the integrity of the data. FIPS 204 specifies ML-DSA (Module-Lattice-Based Digital Signature Algorithm, derived from CRYSTALS-Dilithium), the primary recommendation for general-purpose signatures. For environments where a different mathematical foundation is required as a safety measure, FIPS 205 specifies SLH-DSA (Stateless Hash-Based Digital Signature Algorithm, derived from SPHINCS+) as a fallback. Although SLH-DSA produces larger signatures and runs more slowly, its security is based on hash functions rather than lattices, providing a “hedged” defense if a future breakthrough targets lattice-based math.
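The idea that a signature can rest on nothing but a hash function is easiest to see in the Lamport one-time signature, a much simpler ancestor of SLH-DSA. This is not SLH-DSA (which combines WOTS+, FORS, and a Merkle hypertree so that one key can sign many messages); it is a minimal sketch using SHA-256 from the standard library.

```python
import hashlib
import secrets


def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    """256 pairs of random secrets, one pair per bit of a SHA-256 digest."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(s0), H(s1)) for s0, s1 in sk]
    return pk, sk


def digest_bits(message: bytes) -> list[int]:
    d = H(message)
    return [(d[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk, message: bytes) -> list[bytes]:
    # Reveal one secret from each pair, chosen by the digest bit.
    # A key pair must sign only ONE message: each signature leaks half the key.
    return [pair[bit] for pair, bit in zip(sk, digest_bits(message))]


def verify(pk, message: bytes, signature: list[bytes]) -> bool:
    if len(signature) != len(pk):
        return False  # reject truncated signatures (zip would silently shorten)
    return all(H(s) == pair[bit]
               for s, pair, bit in zip(signature, pk, digest_bits(message)))


pk, sk = keygen()
sig = sign(sk, b"firmware v2.1")
print(verify(pk, b"firmware v2.1", sig))  # True
print(verify(pk, b"firmware v2.2", sig))  # False
```

Forging a signature requires inverting SHA-256, which quantum computers are not known to do efficiently. The 8 KiB signature also illustrates why hash-based schemes trade size for conservative security assumptions.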

Categorization by Security Levels

NIST defines five security categories, and each algorithm offers parameter sets targeting some of them: ML-KEM-512, ML-KEM-768, and ML-KEM-1024 target Levels I, III, and V, while ML-DSA-44, ML-DSA-65, and ML-DSA-87 target Levels II, III, and V. Level I is at least as hard to break as exhaustive key search on AES-128, Level III aligns with AES-192, and Level V provides the robustness of AES-256. Most enterprise architectures will likely aim for Level III or higher. This ensures a sufficient safety margin against future advancements in quantum cryptanalysis while managing the performance overhead inherent in higher security tiers.
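A policy such as “Level III or higher” can be turned into a concrete parameter choice mechanically. The sketch below tabulates the ML-KEM and ML-DSA parameter sets with their NIST categories and sizes in bytes (as specified in FIPS 203 and FIPS 204; note ML-DSA-44 targets category II) and picks the smallest set that satisfies a minimum category. The helper name is hypothetical.

```python
from typing import NamedTuple


class ParamSet(NamedTuple):
    name: str
    category: int          # NIST security category (1 to 5)
    public_key_bytes: int
    output_bytes: int      # ciphertext (ML-KEM) or signature (ML-DSA) size


ML_KEM = [
    ParamSet("ML-KEM-512", 1, 800, 768),
    ParamSet("ML-KEM-768", 3, 1184, 1088),
    ParamSet("ML-KEM-1024", 5, 1568, 1568),
]
ML_DSA = [
    ParamSet("ML-DSA-44", 2, 1312, 2420),
    ParamSet("ML-DSA-65", 3, 1952, 3309),
    ParamSet("ML-DSA-87", 5, 2592, 4627),
]


def smallest_meeting(params: list[ParamSet], min_category: int) -> ParamSet:
    """Pick the least expensive set that satisfies a policy's minimum category."""
    eligible = [p for p in params if p.category >= min_category]
    if not eligible:
        raise ValueError(f"no parameter set reaches category {min_category}")
    return min(eligible, key=lambda p: p.public_key_bytes)


print(smallest_meeting(ML_KEM, 3).name)  # ML-KEM-768
print(smallest_meeting(ML_DSA, 3).name)  # ML-DSA-65
```

Keeping this mapping in data rather than code also means a later policy change (say, to Level V) is a one-line edit.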

Mechanics of Hybrid Deployment Models

Moving directly to pure post-quantum algorithms carries inherent risks: these new primitives have not been “battle-tested” in production for decades the way RSA has. To mitigate the risk of a mathematical flaw in the new algorithms, the industry is adopting hybrid deployment models. In a hybrid setup, a shared secret from a classical algorithm (like ECDH) is combined with a shared secret from a post-quantum algorithm (like ML-KEM) using a Key Derivation Function (KDF) to create a single session secret.
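A minimal sketch of the combining step, assuming both shared secrets are fixed-length 32-byte values (as they are for ML-KEM and X25519), so simple concatenation is unambiguous. HKDF-SHA256 is implemented from RFC 5869 with the standard library; the `combine` helper and its label are hypothetical. In TLS 1.3 hybrids the concatenated secret instead enters the handshake’s own HKDF-based key schedule, so this standalone call is a simplification.

```python
import hashlib
import hmac
import secrets


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a PRK, then expand it."""
    prk = hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine(pq_secret: bytes, classical_secret: bytes, transcript: bytes) -> bytes:
    """Derive one session key from both shared secrets.

    The output stays unpredictable as long as EITHER input stays secret,
    so an attacker must break both algorithms to recover the key.
    """
    return hkdf_sha256(pq_secret + classical_secret, salt=b"",
                       info=b"hybrid-kem-demo|" + transcript)


# Random stand-ins for the ML-KEM and ECDH outputs.
pq_ss, ecdh_ss = secrets.token_bytes(32), secrets.token_bytes(32)
key = combine(pq_ss, ecdh_ss, transcript=b"handshake-hash")
print(len(key))  # 32
```

Binding the handshake transcript into `info` ties the derived key to this specific exchange.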

This “dual-key” approach ensures that the connection remains secure as long as at least one of the underlying algorithms remains unbroken. If a flaw is discovered in the new post-quantum algorithms, the classical layer still provides the protection we rely on today. Conversely, if a quantum computer appears, the post-quantum layer provides the necessary defense. This belt-and-suspenders approach is widely recommended for the 2026-2030 transition period.

Integrating these hybrids into existing protocols like TLS 1.3 or SSH introduces technical challenges. Post-quantum keys and signatures are significantly larger than their classical counterparts. For example, an ML-KEM-768 public key is 1,184 bytes, compared with 32 bytes for an X25519 public key or 65 bytes for an uncompressed P-256 point. As a result, a handshake message such as the TLS ClientHello may no longer fit within a single packet of a standard Maximum Transmission Unit (MTU) and must be split across several. Architects must account for the added latency and for potential drops by middleboxes, such as firewalls or load balancers, that do not expect handshake messages of this size or that span multiple packets.
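The packet arithmetic can be checked quickly. This sketch assumes a 1,500-byte Ethernet MTU, 40 bytes of IPv4 and TCP headers without options, and a hypothetical 500 bytes for everything in the ClientHello except the key share (real clients vary). The hybrid key share for the TLS group X25519MLKEM768 carries an ML-KEM-768 public key plus an X25519 key.

```python
import math

MTU = 1500
IP_TCP_HEADERS = 40          # IPv4 + TCP, no options (assumption)
MSS = MTU - IP_TCP_HEADERS   # 1460 bytes of TCP payload per segment

KEY_SHARE_BYTES = {
    "X25519": 32,
    "X25519MLKEM768": 1184 + 32,  # ML-KEM-768 public key + X25519 public key
}


def segments_needed(base_client_hello: int, group: str) -> int:
    """TCP segments needed for a ClientHello offering a single key share.

    base_client_hello is an assumed size for everything except the key
    share (cipher suites, SNI, ALPN, other extensions).
    """
    total = base_client_hello + KEY_SHARE_BYTES[group]
    return math.ceil(total / MSS)


for group in KEY_SHARE_BYTES:
    print(group, segments_needed(base_client_hello=500, group=group))
# X25519 1
# X25519MLKEM768 2
```

Crossing from one segment to two is exactly the change that can trip middleboxes expecting a ClientHello in a single packet.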

Solving the Double Migration Bottleneck

A common mistake in cryptographic transitions is focusing solely on the algorithm while ignoring the implementation logic. The real operational bottleneck is the “Double Migration.” If you refactor your codebase today to replace RSA with ML-KEM manually, you may find yourself refactoring it again if a more efficient parameter set is standardized or a specific implementation is deprecated. This creates a cycle of technical debt that many organizations cannot afford.

The goal is to move away from hardcoded cryptography. When business logic is tightly coupled with specific cryptographic libraries, every update becomes a high-stakes engineering project. To avoid this, organizations should implement abstraction layers. These layers separate the “what” (encrypt this data) from the “how” (using ML-KEM with specific parameters). This concept, known as cryptographic agility, allows the underlying algorithms to be swapped via configuration rather than code changes.

Building for cryptographic agility involves using provider-based architectures where the application calls a generic interface. By architecting for agility now, you solve the migration problem once. The first migration moves the system to a modular architecture; subsequent updates to follow evolving post-quantum cryptography standards become routine maintenance rather than emergency refactors.
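A minimal sketch of a provider-based design: business code asks a registry for an algorithm by identifier, the identifier comes from configuration, and each protected record stores the identifier it was produced with so old data stays verifiable after the default changes. HMAC-SHA256 and HMAC-SHA3-256 from the standard library stand in for real primitives here; an ML-KEM or ML-DSA provider would register the same way, but that wiring is hypothetical and not shown.

```python
import hashlib
import hmac
from typing import Callable

MacFn = Callable[[bytes, bytes], bytes]

# Registry of interchangeable providers keyed by algorithm identifier.
PROVIDERS: dict[str, MacFn] = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha3-256": lambda key, msg: hmac.new(key, msg, hashlib.sha3_256).digest(),
}


def protect(config: dict, key: bytes, msg: bytes) -> dict:
    """Business logic says 'protect this'; configuration decides how."""
    alg = config["mac_algorithm"]
    return {"alg": alg, "msg": msg, "tag": PROVIDERS[alg](key, msg)}


def check(envelope: dict, key: bytes) -> bool:
    """Verify with the algorithm recorded in the envelope, not the current default."""
    expected = PROVIDERS[envelope["alg"]](key, envelope["msg"])
    return hmac.compare_digest(expected, envelope["tag"])


key = b"demo-key"
old = protect({"mac_algorithm": "hmac-sha256"}, key, b"invoice 42")
new = protect({"mac_algorithm": "hmac-sha3-256"}, key, b"invoice 42")  # config change only
print(check(old, key), check(new, key), new["alg"])  # True True hmac-sha3-256
```

The algorithm tag on each envelope is what makes the second migration routine: records written before the switch are still checked with the algorithm that produced them.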

Strategic Roadmap for Enterprise Transition

The first step in a transition roadmap is discovery. Most organizations do not have a comprehensive inventory of where their cryptography lives. This includes web servers, internal microservices, legacy databases, and third-party SaaS integrations. Organizations must identify which data assets have the longest required lifespan and prioritize those for migration. This is often documented through a Cryptographic Bill of Materials (CBOM), which lists every algorithm, key length, and certificate in use across an environment.
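Once a CBOM exists, prioritization can be automated. Standard formats such as CycloneDX can carry cryptographic asset data; the sketch below uses plain Python records instead, with hypothetical field names and inventory entries, and orders the quantum-vulnerable entries by how long the protected data must stay confidential.

```python
from dataclasses import dataclass

# Public-key algorithms broken by Shor's algorithm (illustrative list).
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA", "X25519", "Ed25519"}


@dataclass
class CryptoAsset:
    system: str
    algorithm: str
    key_bits: int
    data_lifespan_years: int  # how long the protected data must stay confidential


def migration_queue(inventory: list[CryptoAsset]) -> list[CryptoAsset]:
    """Quantum-vulnerable entries, longest-lived data first."""
    vulnerable = [a for a in inventory if a.algorithm in QUANTUM_VULNERABLE]
    return sorted(vulnerable, key=lambda a: a.data_lifespan_years, reverse=True)


# Hypothetical inventory entries.
inventory = [
    CryptoAsset("public web TLS", "ECDH", 256, 1),
    CryptoAsset("patient archive", "RSA", 2048, 30),
    CryptoAsset("backup encryption", "AES", 256, 30),
    CryptoAsset("internal mTLS", "ECDSA", 256, 5),
]
for asset in migration_queue(inventory):
    print(asset.system, asset.algorithm)
# patient archive RSA
# internal mTLS ECDSA
# public web TLS ECDH
```

AES-256 drops out of the queue because symmetric ciphers at that key size are not considered broken by known quantum attacks.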

Once you have an inventory, begin testing in non-production environments. Modern libraries such as OpenSSL (which includes ML-KEM, ML-DSA, and SLH-DSA natively from version 3.5) and wolfSSL already incorporate post-quantum primitives. Running these in a staging environment reveals how the increased computational overhead and larger key sizes affect your specific application’s performance. For example, a high-frequency trading application might be more sensitive to increased handshake latency than a standard web application.

Vendor management is equally critical: your security is only as strong as the weakest link in your supply chain. Ask your cloud providers, such as Amazon Web Services or Microsoft Azure, about their roadmaps for PQC support. Aligning your internal transition with regulatory frameworks like the NSA’s Commercial National Security Algorithm Suite 2.0 (CNSA 2.0), which governs National Security Systems, will help ensure compliance. As of 2026, many federal mandates for quantum resistance have begun to take effect, making vendor alignment a matter of regulatory necessity.
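Vendor questionnaires can be checked against a target suite automatically. The sketch below encodes the CNSA 2.0 algorithm list (AES-256, ML-KEM-1024, ML-DSA-87, SHA-384 or SHA-512, and LMS or XMSS for firmware signing) and reports where a vendor’s declared algorithms fall short. The role names and the vendor declaration are hypothetical; confirm the list against current NSA guidance before relying on it.

```python
# CNSA 2.0 algorithms by role (role names are this sketch's own labels).
CNSA_2_0 = {
    "symmetric": {"AES-256"},
    "key_establishment": {"ML-KEM-1024"},
    "signature": {"ML-DSA-87"},
    "hash": {"SHA-384", "SHA-512"},
    "firmware_signing": {"LMS", "XMSS"},
}


def cnsa_gaps(vendor_suite: dict[str, str]) -> dict[str, str]:
    """Return the roles where a vendor's declared algorithm is not in CNSA 2.0."""
    return {role: alg for role, alg in vendor_suite.items()
            if alg not in CNSA_2_0.get(role, set())}


# Hypothetical vendor declaration.
vendor = {
    "symmetric": "AES-256",
    "key_establishment": "ML-KEM-768",
    "signature": "ML-DSA-87",
    "hash": "SHA-256",
}
print(cnsa_gaps(vendor))
# {'key_establishment': 'ML-KEM-768', 'hash': 'SHA-256'}
```

Note that CNSA 2.0 targets the highest parameter sets, so a vendor offering ML-KEM-768 can meet a “Level III or higher” enterprise policy and still fall short here.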

Operational Challenges in PQC Governance

The Certificate Authority (CA) ecosystem is undergoing a significant evolution. Traditional X.509 certificates must grow to accommodate post-quantum public keys and signatures. This requires changes to how certificates are parsed and validated by client software. We expect a period where “hybrid certificates” containing both classical and PQC signatures become the norm. These allow for backward compatibility: older clients see the classical signature and proceed, while quantum-aware clients verify both for enhanced security.
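The verification policy matters as much as the certificate format. A minimal sketch, assuming a hypothetical certificate record that carries both signatures over the same body: legacy clients check only the classical signature, while quantum-aware clients require both to pass. Accepting either signature alone would let an attacker who breaks one algorithm forge certificates. HMAC tags stand in for real ECDSA and ML-DSA signatures purely to keep the example self-contained, and the sketch does not model any real X.509 encoding.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Callable, Optional

Verifier = Callable[[bytes, bytes], bool]  # (signed body, signature) -> valid?


@dataclass
class HybridCertificate:
    tbs: bytes            # the to-be-signed certificate body
    classical_sig: bytes  # stand-in for, e.g., an ECDSA signature
    pq_sig: bytes         # stand-in for, e.g., an ML-DSA signature


def accept(cert: HybridCertificate, classical: Verifier,
           pq: Optional[Verifier] = None) -> bool:
    if not classical(cert.tbs, cert.classical_sig):
        return False
    if pq is None:  # legacy client: ignores the PQ signature it cannot parse
        return True
    return pq(cert.tbs, cert.pq_sig)  # quantum-aware client: BOTH must pass


def hmac_verifier(key: bytes) -> Verifier:
    """Placeholder verifier built from HMAC; not a real signature scheme."""
    return lambda msg, sig: hmac.compare_digest(
        hmac.new(key, msg, hashlib.sha256).digest(), sig)


classical_key, pq_key = b"classical-demo", b"pq-demo"
tbs = b"CN=example.test"
good = HybridCertificate(tbs, hmac.new(classical_key, tbs, hashlib.sha256).digest(),
                         hmac.new(pq_key, tbs, hashlib.sha256).digest())
forged_pq = HybridCertificate(tbs, good.classical_sig, b"\x00" * 32)

classical_v, pq_v = hmac_verifier(classical_key), hmac_verifier(pq_key)
print(accept(good, classical_v, pq_v))       # True
print(accept(forged_pq, classical_v))        # True (legacy client cannot tell)
print(accept(forged_pq, classical_v, pq_v))  # False
```

The second result is the backward-compatibility trade-off in action: legacy clients keep working but receive only classical assurance.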

Hardware Security Modules (HSMs) and Trusted Platform Modules (TPMs) present a physical hurdle. Many current hardware roots of trust are optimized for the specific math of RSA or ECC. To support post-quantum cryptography standards, many of these devices will require firmware updates. In cases where the hardware lacks the memory or processing power to handle lattice-based math, complete hardware replacement may be necessary. This is particularly challenging for IoT devices and embedded systems with long lifecycles and limited compute resources.

Finally, governance requires continuous monitoring for “cryptographic drift.” As different teams within an organization migrate at different speeds, you risk a fragmented environment where some secrets are quantum-secure and others remain vulnerable. Centralized policy management tools can help enforce the use of approved PQC suites. These tools ensure that no legacy, vulnerable protocols are reintroduced into the environment during maintenance or scaling operations. Consistent auditing is the only way to ensure the theoretical security of an architecture matches its practical implementation.
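Drift monitoring can be as simple as comparing successive scans. The sketch below assumes a hypothetical scanner that records, per endpoint, the TLS key-exchange group it negotiated, and it flags endpoints that regressed from an approved hybrid group back to a classical one. The approved set and endpoint names are illustrative assumptions.

```python
# Hypothetical policy: hybrid post-quantum TLS groups that are approved.
APPROVED_GROUPS = {"X25519MLKEM768", "SecP256r1MLKEM768"}


def drift_report(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two scans mapping endpoint -> negotiated key-exchange group."""
    report = {"regressed": [], "still_vulnerable": [], "new_noncompliant": []}
    for endpoint, group in sorted(current.items()):
        if group in APPROVED_GROUPS:
            continue
        before = previous.get(endpoint)
        if before is None:
            report["new_noncompliant"].append(endpoint)  # appeared since last scan
        elif before in APPROVED_GROUPS:
            report["regressed"].append(endpoint)         # was PQ, now classical again
        else:
            report["still_vulnerable"].append(endpoint)  # never migrated
    return report


previous = {"api.internal": "X25519MLKEM768", "billing": "x25519",
            "auth": "X25519MLKEM768"}
current = {"api.internal": "x25519", "billing": "x25519",
           "auth": "X25519MLKEM768", "reports": "secp256r1"}
print(drift_report(previous, current))
# {'regressed': ['api.internal'], 'still_vulnerable': ['billing'], 'new_noncompliant': ['reports']}
```

Regressions deserve the loudest alert: they usually mean a maintenance or scaling change reintroduced a legacy configuration.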

“The complexity of migrating to post-quantum standards lies not in the math of the algorithms, but in the brittle nature of the systems we have built around their predecessors.”

Success in this transition is measured by the invisibility of the change. By moving toward a modular, agile architecture today, organizations can implement the necessary protections against quantum threats without incurring the technical debt of a secondary refactor later this decade. The focus must remain on structural integrity and the decoupling of secrets from the logic that uses them.